S3's Transform and Lighting Engine

White Paper Courtesy of S3

Why hardware geometry?

Without a doubt, this is the most frequently asked question. Why should S3 add complexity and cost to its 3D parts by adding geometry capabilities?

Hardware geometry offloads the CPU

With the advent of hardware geometry, 3D cards are now capable of rendering entire scenes with little or no CPU intervention, which will revolutionize the 3D applications industry. Instead of the CPU spending 50-95% of its time moving and transforming data, static geometry can be prepositioned in AGP or video memory and fetched directly by the 3D engine with massive bandwidth. As well as saving CPU cycles, this technique significantly reduces traffic on the system memory bus, which in turn reduces contention for the same memory that the 3D chip accesses across AGP. Even if the static geometry resides in AGP memory, system memory bandwidth requirements are reduced by at least a factor of 2, and possibly by a factor of 4 or more. Finally, dynamic geometry still gets the transformation benefits, though not the bandwidth improvements, for a 40-50% speed enhancement.

This is particularly important to game developers, who are urgently seeking more CPU time for the powerful, CPU-hungry artificial intelligence (AI) and physics engines currently in development. According to Jason Denton, Developer for UK-based Rage Software, "We can never have enough spare CPU time for physics engines and, even if I was able to use every CPU cycle possible, I still wouldn't be able to do everything I want!"

Why use hardware geometry when texture-based lighting is available?

Texture-based lighting (lightmaps) has emerged in recent years as an extremely important technology, with an impressive ability to render realistic-looking scenes. We expect this powerful tool to remain very important, and light-mapping is complemented, not eliminated, by hardware lighting support.
Lightmaps are excellent for static lighting, because offline rendering tools can use slow radiosity-based lighting engines that produce very realistic results but take far too long to run in real time. However, lightmaps do not produce particularly good dynamic lighting. The effects are CPU-intensive, tend to be restricted to casting unrealistic spheres of light around objects, and often leave the 'grid' of the lightmap texture visible. Dynamically relighting an entire world, for example changing the lighting when a light source is destroyed, is a very difficult problem to solve.

Fig. 4. Lightmap aliasing problems in Quake 2. Note the jagged edges around the light sphere cast on the ground by the firing of the gun.

Hardware lighting allows the 3D engine to calculate dynamic lights quickly and easily as part of rendering, and their effect can be added to that of the lightmap using the standard multitexture pipelines. The multitexture equation that combines accurate static lightmap lighting with hardware-lit dynamic lighting is: ((diffuse + lightmap) * base_texture) + specular. This is easily programmed into any Direct3D-compliant multitexturing hardware using only two texture stages.
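
The following is a minimal Python sketch of that lighting path for a single pixel, offered as an illustration rather than S3's implementation. It assumes one directional light, a Lambert (N·L) diffuse model, and RGB channels in [0, 1] that saturate at each stage the way fixed-function color stages typically do. The names `vertex_diffuse` and `combine` are hypothetical. On real hardware the diffuse value would be computed per vertex and interpolated across the triangle before reaching the texture stages.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Color:
    """RGB color; channels are nominally in [0, 1]."""
    r: float
    g: float
    b: float

    def channels(self) -> Vec3:
        return (self.r, self.g, self.b)


def clamp01(v: float) -> float:
    """Saturate one channel to [0, 1] (assumed fixed-function behavior)."""
    return max(0.0, min(1.0, v))


def vertex_diffuse(normal: Vec3, to_light: Vec3, light: Color, material: Color) -> Color:
    """Lambert diffuse term for one directional light at one vertex.

    `normal` and `to_light` are assumed to be unit-length vectors.
    """
    # Surfaces facing away from the light (negative N.L) receive no diffuse light.
    n_dot_l = max(0.0, sum(n * l for n, l in zip(normal, to_light)))
    return Color(*(lc * mc * n_dot_l
                   for lc, mc in zip(light.channels(), material.channels())))


def combine(diffuse: Color, lightmap: Color, base: Color, specular: Color) -> Color:
    """((diffuse + lightmap) * base_texture) + specular, channel by channel."""
    out = []
    for d, lm, bt, sp in zip(diffuse.channels(), lightmap.channels(),
                             base.channels(), specular.channels()):
        stage0 = clamp01(d + lm)           # stage 0: add lightmap texel to diffuse
        stage1 = stage0 * bt               # stage 1: modulate by base texture texel
        out.append(clamp01(stage1 + sp))   # specular added after the texture stages
    return Color(*out)


if __name__ == "__main__":
    diffuse = vertex_diffuse((0.0, 0.0, 1.0), (0.0, 0.6, 0.8),
                             light=Color(1.0, 0.5, 0.25), material=Color(1.0, 1.0, 1.0))
    pixel = combine(diffuse, lightmap=Color(0.25, 0.25, 0.25),
                    base=Color(0.5, 0.5, 1.0), specular=Color(0.0, 0.0, 0.0))
    print(pixel)
```

On Direct3D 6/7-class hardware, one plausible two-stage mapping is: stage 0 uses `D3DTOP_ADD` with the lightmap (`D3DTA_TEXTURE`) and `D3DTA_DIFFUSE`; stage 1 uses `D3DTOP_MODULATE` with the base texture (`D3DTA_TEXTURE`) and `D3DTA_CURRENT`; the specular color is then added after the texture stages when `D3DRENDERSTATE_SPECULARENABLE` is set.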

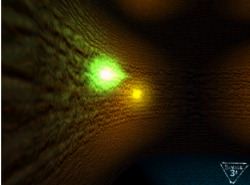

Fig. 5. Lightmaps with dynamic diffuse lighting. The wall, a lightmapped surface, is brightened by dynamic vertex lighting. (Screenshot taken from the '24bit' demo in S3's SDK3.0.)

Hardware vertex lighting is also the first step towards consumer 3D accelerators that support per-pixel (often mistakenly called 'Phong') lighting in hardware, an approach that should provide much better results than multitextured solutions. Introducing hardware vertex lighting to developers now will create a solid base of applications that support hardware lighting and are ready to take the next step into photorealistic 3D.

What about faster CPUs with 3D-optimized instruction sets?

While the technology Intel and AMD have introduced to accelerate 3D transform and lighting operations (SSE and 3DNow!, respectively) has significantly increased the CPU's ability to handle geometry tasks, a hardware geometry engine still has a very valuable part to play. In a typical case, these 3D-enhanced processors have cut the number of CPU cycles needed to generate a vertex by a factor of 3-4. Yet because the CPU uses the same bus to reach both system memory and the 3D chipset, feeding data to the 3D chip was already a significant performance bottleneck before these transform enhancements arrived. The ideal situation is for the chip to feed itself, fetching its own geometry data from video memory or AGP memory without the CPU ever touching it. The new instruction sets offer minor improvements through better read prefetching, but they remain fundamentally limited by the data bandwidth available across the memory buses. With geometry handled by the 3D part, the CPU's enhanced math engines are free for the more general tasks to which they are better suited: physics, AI, dynamic modeling, and procedural texture generation.

Bottom line: placing geometry on the 3D part frees up the programmer's imagination.

Copyright © 1997 - 2000 COMBATSIM.COM, INC. All Rights Reserved. Last Updated September 15th, 1999
